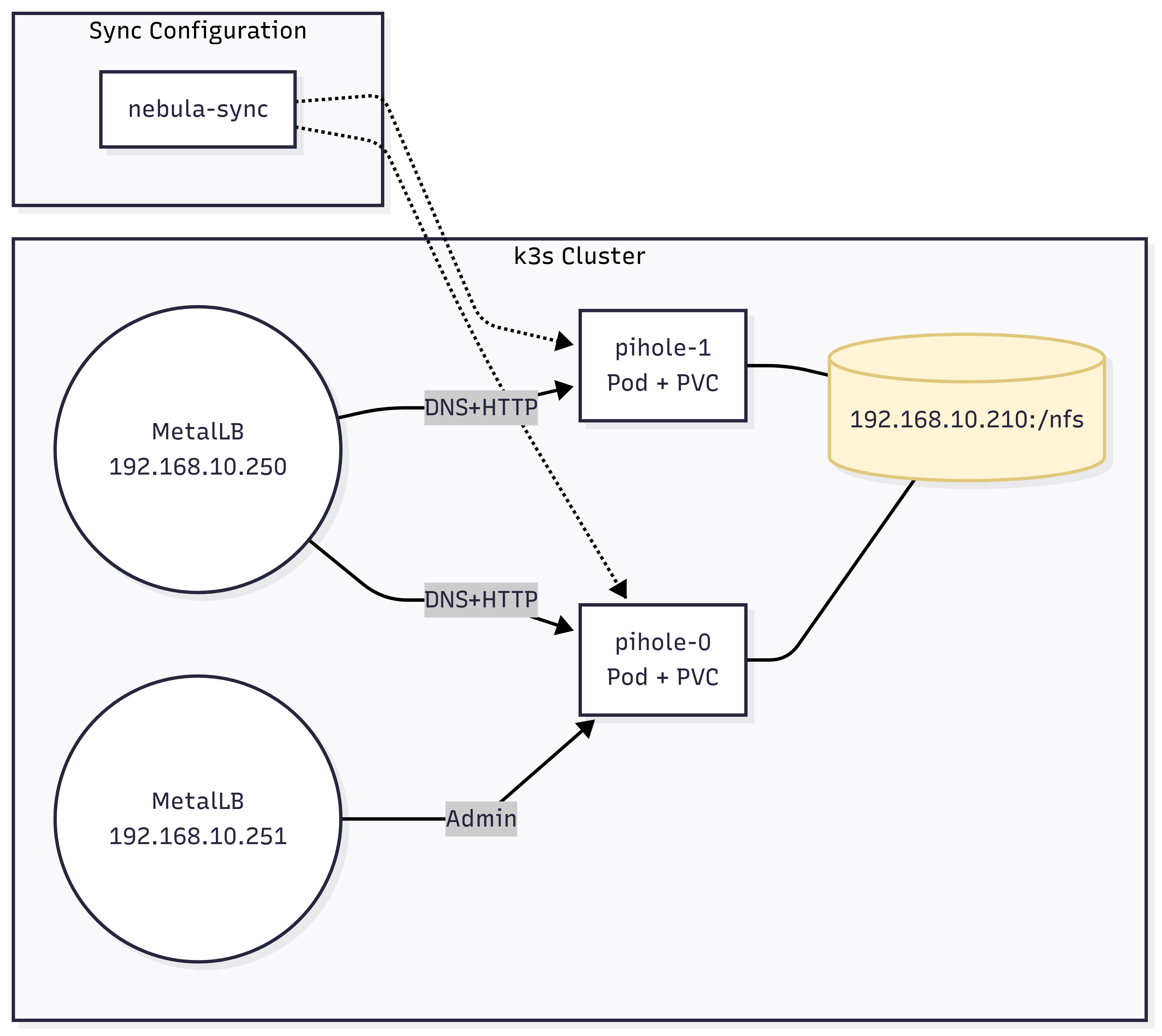

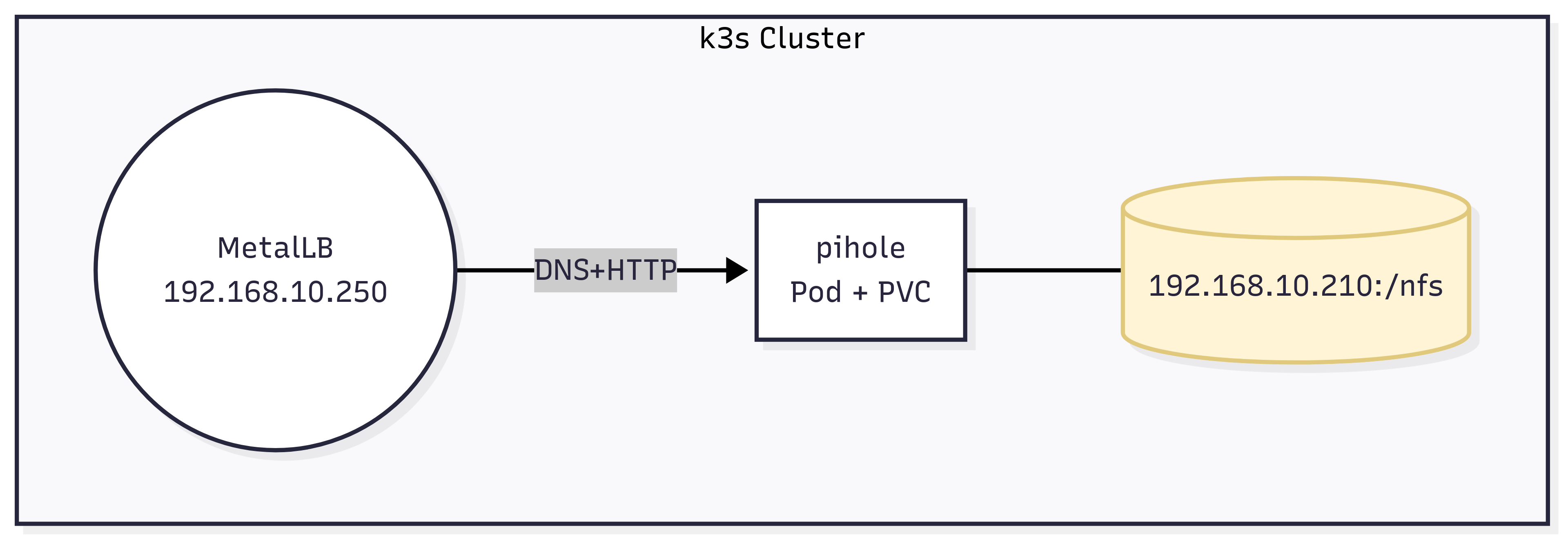

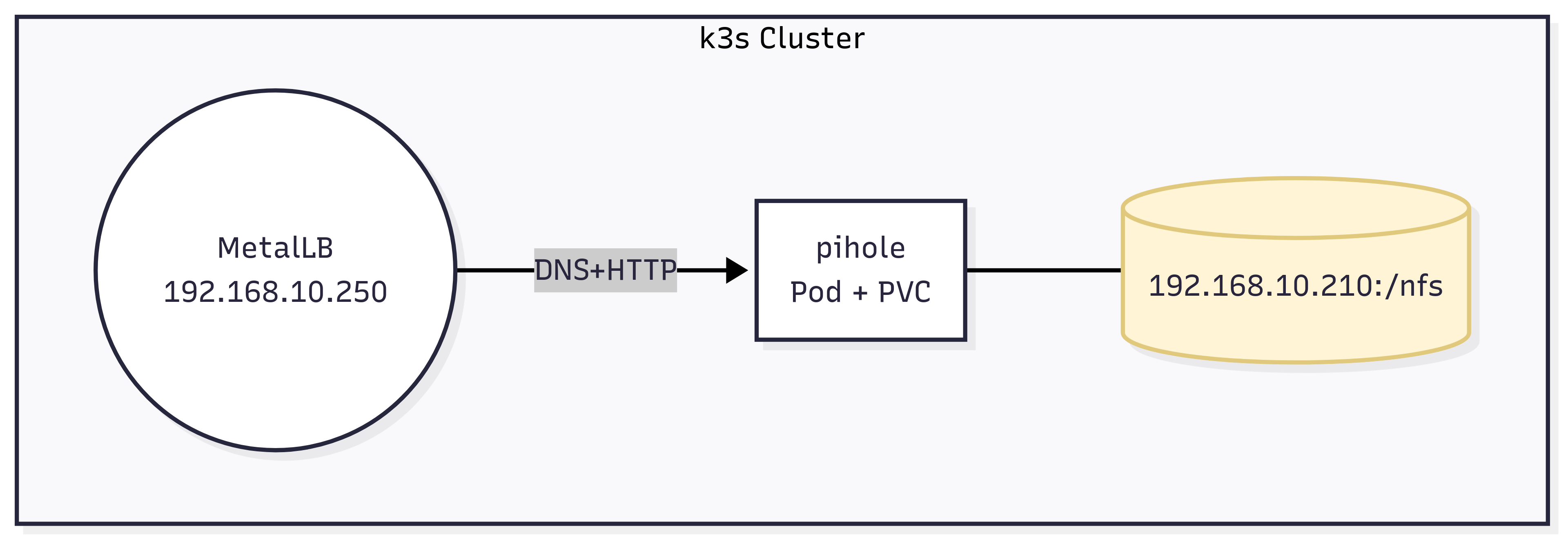

Since I put Pi-hole into service, it has been the DNS server for the whole house: every device relies on it to resolve names before it can reach the internet. The diagram below shows the original, single-instance layout:


The setup is easy to deploy and works fine most of the time, except when Pi-hole crashes and the entire network instantly goes dark.

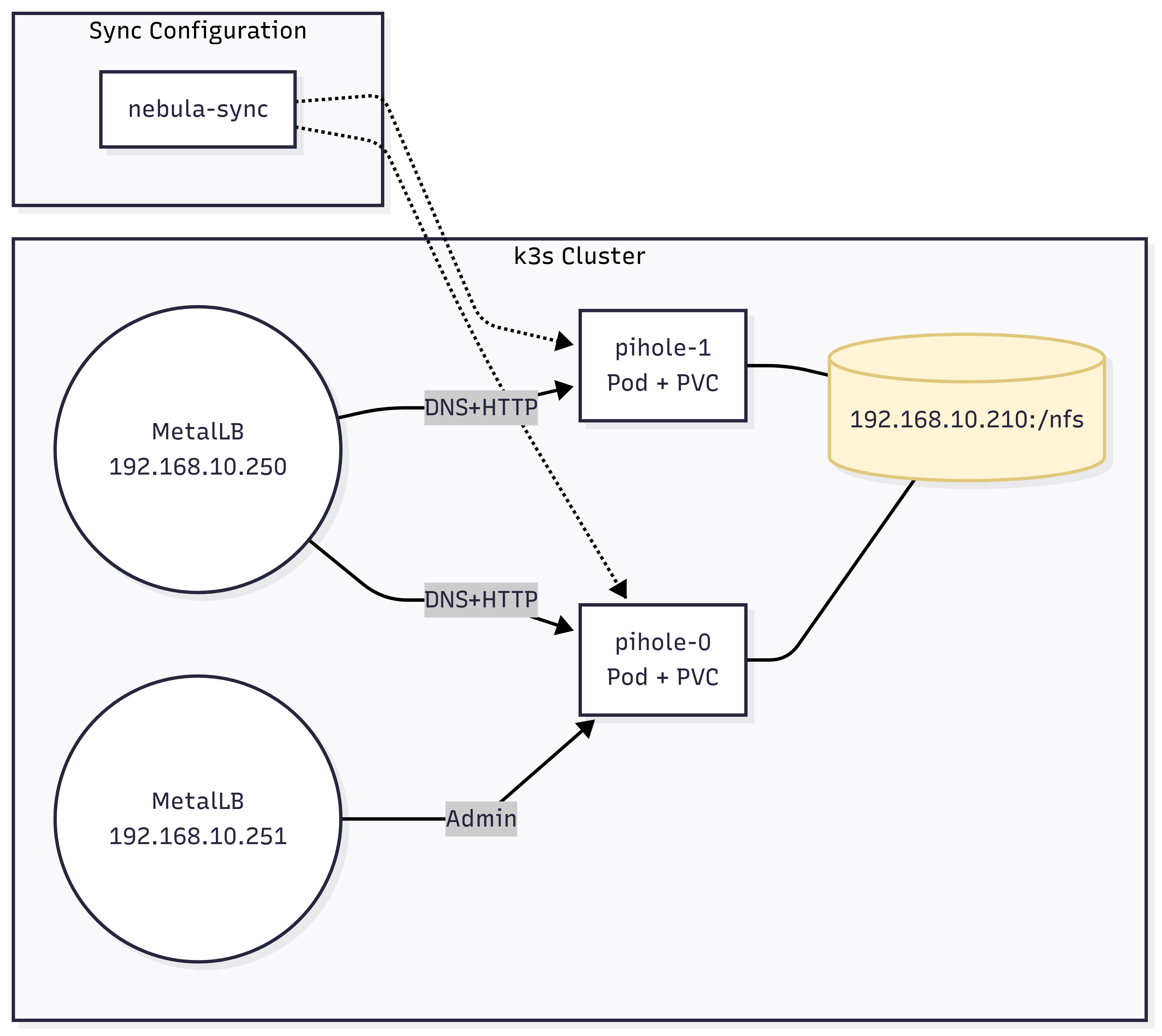

The obvious fix is to add a replica, so if one instance dies the other one can keep answering queries. That change, however, has ripple effects:

- Pi-hole stores its data in SQLite. Two processes writing to the same database file will trip over each other's locks in no time and can corrupt it, and SQLite's documentation warns that its file locking is unreliable on many NFS implementations.
  - The safe approach is “one Pod, one PVC”, and the cleanest way to get numbered PVCs is to switch from a Deployment to a StatefulSet.
- Once there are two separate PVCs, you need something to keep the configurations in sync.
  - nebula-sync handles primary → replica sync with almost zero effort.
- Primary/replica means you must know which instance you are talking to.
  - The single LoadBalancer IP (192.168.10.250) is no longer enough, because you can’t guarantee that it lands on the primary.
  - The simple fix is to give the primary its own LB IP (192.168.10.251) and use that address for the web UI and all configuration changes.

Put together, the new architecture looks like this:

## Dynamic NFS Provisioner

Until now, every PersistentVolume had to be written by hand and then matched to a PVC: pure boilerplate.

The nicer way is to install an external provisioner in the k3s cluster and create a StorageClass whose `provisioner:` field points at it (the Helm chart below creates that StorageClass for you).

Whenever a new PVC requests that class, the provisioner automatically:

- creates a sub-directory on the NFS share,
- generates the PV,
- binds it to the PVC.

This is exactly what Kubernetes calls Dynamic Volume Provisioning.

```bash
helm repo add nfs https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner
helm repo update

# Single quotes keep the shell from touching ${...}; the provisioner expands it per PVC
helm upgrade --install nfs-provisioner nfs/nfs-subdir-external-provisioner \
  -n nfs-provisioner --create-namespace \
  --set nfs.server=192.168.10.210 \
  --set nfs.path=/nfs \
  --set storageClass.name=nfs-dynamic \
  --set storageClass.pathPattern='${.PVC.namespace}-${.PVC.name}'
```
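If you'd rather keep the chart settings in version control than in a long command line, the same four `--set` flags can live in a values file. This is a sketch: the file name `nfs-values.yaml` is an arbitrary choice, and the keys simply mirror the flags above.

```yaml
# nfs-values.yaml (hypothetical file name): equivalent of the --set flags above
nfs:
  server: 192.168.10.210   # NFS server exporting the share
  path: /nfs               # exported directory; one sub-directory per PVC is created here
storageClass:
  name: nfs-dynamic
  # Passed through as a literal string; the provisioner expands it per PVC,
  # e.g. pihole-pihole-config-pihole-0
  pathPattern: "${.PVC.namespace}-${.PVC.name}"
```

It would then be applied with `helm upgrade --install nfs-provisioner nfs/nfs-subdir-external-provisioner -n nfs-provisioner --create-namespace -f nfs-values.yaml`.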

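`pathPattern` is set to `<namespace>-<pvcName>`, so the folders look like this. StatefulSet PVCs are named `<template>-<pod>` (for example `pihole-config-pihole-0`), which is where the repeated `pihole` comes from:

```
/nfs/
├── pihole-dnsmasq-config-pihole-0/
├── pihole-dnsmasq-config-pihole-1/
├── pihole-pihole-config-pihole-0/
├── pihole-pihole-config-pihole-1/
└── grafana-grafana-data/
```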
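Workloads that aren't StatefulSets just need a regular PVC that names the class. The sketch below is the kind of claim that would produce the `grafana-grafana-data` folder; the Grafana namespace and claim name are assumptions read off the folder name, not a manifest from this setup.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data        # hypothetical claim name
  namespace: grafana        # hypothetical namespace
spec:
  accessModes: ["ReadWriteOnce"]
  storageClassName: nfs-dynamic   # this is what hands the claim to the NFS provisioner
  resources:
    requests:
      storage: 1Gi          # recorded on the PV; the subdir provisioner does not enforce it as a quota
```

Once applied, `kubectl get pvc -n grafana` should show the claim as `Bound` to a PV that nobody had to write by hand.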

A quick verification:

```bash
kubectl get sc nfs-dynamic
kubectl get pods -n nfs-provisioner
```

## ConfigMap

Next step: copy over the old ConfigMap with our custom `02-custom.conf`, `addn-hosts`, and CNAME rules.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: pihole-custom-dnsmasq
  namespace: pihole
  labels:
    app: pihole
data:
  02-custom.conf: |
    addn-hosts=/etc/addn-hosts
    # DHCP option 6 = DNS server handed out to DHCP clients
    dhcp-option=6,192.168.10.250
  addn-hosts: |                  # your "IP hostname" entries (omitted here)
  05-pihole-custom-cname.conf: | # your "cname=alias,target" rules (omitted here)
```
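One thing to watch: the StatefulSet manifest in the next section mounts only `02-custom.conf` and `addn-hosts` from this ConfigMap, so `05-pihole-custom-cname.conf` never reaches the Pods. If you want dnsmasq to load those CNAME rules too, a third `subPath` mount along these lines should do it. This is a sketch; it relies on `FTLCONF_misc_etc_dnsmasq_d` being `"true"`, as it is in that manifest.

```yaml
# Fragment for the pihole container in the StatefulSet: append to its volumeMounts
volumeMounts:
  - name: custom-dnsmasq
    mountPath: /etc/dnsmasq.d/05-pihole-custom-cname.conf
    subPath: 05-pihole-custom-cname.conf
```

Like the other `subPath` mounts, it will not pick up edits to the ConfigMap until the Pod is restarted.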

## StatefulSet

A few notes:

- It uses `podAntiAffinity` to *prefer* scheduling the two Pi-hole Pods on different nodes (nice-to-have, not mandatory).
- The readiness probe checks 53/TCP instead of the admin page, because a responding admin page does not prove that FTL is actually serving DNS.
- It reuses the `pihole-admin` Secret created earlier.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: pihole
  namespace: pihole
spec:
  serviceName: pihole-headless
  replicas: 2
  selector:
    matchLabels: {app: pihole}
  template:
    metadata:
      labels: {app: pihole}
    spec:
      affinity:
        podAntiAffinity:
          # "preferred": spread across nodes when possible, but still schedule
          # both Pods if only one node is available
          preferredDuringSchedulingIgnoredDuringExecution:
            - weight: 100
              podAffinityTerm:
                labelSelector:
                  matchLabels: {app: pihole}
                topologyKey: kubernetes.io/hostname
      containers:
        - name: pihole
          image: ghcr.io/pi-hole/pihole:2025.04.0
          env:
            - {name: TZ, value: America/Los_Angeles}
            - {name: VIRTUAL_HOST, value: pi.hole}
            - {name: FTLCONF_dns_listeningMode, value: "all"}
            - {name: FTLCONF_dns_upstreams, value: "1.1.1.1;8.8.8.8"}
            - {name: FTLCONF_misc_etc_dnsmasq_d, value: "true"}  # let FTL load /etc/dnsmasq.d
            - name: FTLCONF_webserver_api_password
              valueFrom:
                secretKeyRef:
                  key: password
                  name: pihole-admin
          ports:
            - {containerPort: 80, name: http}
            - {containerPort: 53, name: dns-udp, protocol: UDP}
            - {containerPort: 53, name: dns-tcp, protocol: TCP}
          # Ready = DNS port accepts TCP connections; only ready Pods receive Service traffic
          readinessProbe:
            tcpSocket:
              port: 53
            initialDelaySeconds: 15
            periodSeconds: 10
            timeoutSeconds: 3
            failureThreshold: 3
          # Restart the container if the admin UI stays unresponsive for ~3 minutes (6 x 30 s)
          livenessProbe:
            httpGet:
              path: /admin
              port: http
              scheme: HTTP
            initialDelaySeconds: 60
            periodSeconds: 30
            timeoutSeconds: 5
            failureThreshold: 6
          volumeMounts:
            - {name: pihole-config, mountPath: /etc/pihole}
            - {name: dnsmasq-config, mountPath: /etc/dnsmasq.d}
            - {name: custom-dnsmasq, mountPath: /etc/dnsmasq.d/02-custom.conf, subPath: 02-custom.conf}
            - {name: custom-dnsmasq, mountPath: /etc/addn-hosts, subPath: addn-hosts}
      volumes:
        - name: custom-dnsmasq
          configMap:
            name: pihole-custom-dnsmasq
            defaultMode: 420  # = 0644
  # One PVC per Pod per template: pihole-config-pihole-0, pihole-config-pihole-1, ...
  volumeClaimTemplates:
    - metadata: {name: pihole-config}
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: nfs-dynamic
        resources: {requests: {storage: 1Gi}}
    - metadata: {name: dnsmasq-config}
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: nfs-dynamic
        resources: {requests: {storage: 256Mi}}
```
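A `tcpSocket` check only proves that something accepts connections on port 53. If you want readiness to depend on an actual DNS answer, an `exec` probe is a possible alternative. This sketch assumes the image ships `dig` (the official Pi-hole image's own Docker health check uses it, as far as I know) and that FTL answers `pi.hole` locally.

```yaml
# Alternative readinessProbe for the pihole container (replaces the tcpSocket probe)
readinessProbe:
  exec:
    # dig exits non-zero when no DNS reply arrives; +time=2 and +retry=0 keep
    # the check to a single attempt inside the 3 s probe timeout
    command: ["dig", "+short", "+norecurse", "+time=2", "+retry=0", "@127.0.0.1", "pi.hole"]
  initialDelaySeconds: 15
  periodSeconds: 10
  timeoutSeconds: 3
  failureThreshold: 3
```

The trade-off is a process spawn every 10 seconds instead of a cheap TCP connect, which is negligible for two Pods.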

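## Service

Because home traffic is light, I keep `externalTrafficPolicy: Local`.

That means 192.168.10.250 is announced only from a node that runs a ready Pi-hole Pod (MetalLB picks one such node); if that Pod dies, MetalLB moves the IP to the node running the other Pod.

Bonus: one less kube-proxy hop during normal operation, and Pi-hole's query log shows the real client IPs instead of a node address.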

```yaml
# Headless Service: gives each Pod a stable DNS name (pihole-0.pihole-headless...)
apiVersion: v1
kind: Service
metadata:
  name: pihole-headless
  namespace: pihole
spec:
  clusterIP: None
  selector: {app: pihole}
  ports:
    - {port: 80, targetPort: 80, name: http}
---
# DNS
apiVersion: v1
kind: Service
metadata:
  name: pihole-lb
  namespace: pihole
  annotations:
    metallb.universe.tf/allow-shared-ip: pihole
    metallb.universe.tf/address-pool: local-pool
spec:
  type: LoadBalancer
  loadBalancerIP: 192.168.10.250
  selector: {app: pihole}
  externalTrafficPolicy: Local
  ports:
    - {name: http, port: 80, targetPort: 80}
    - {name: dns-udp, port: 53, targetPort: 53, protocol: UDP}
    - {name: dns-tcp, port: 53, targetPort: 53, protocol: TCP}
---
# Admin Web Service: only for pihole-0
apiVersion: v1
kind: Service
metadata:
  name: pihole-admin
  namespace: pihole
  annotations:
    metallb.universe.tf/address-pool: local-pool
spec:
  type: LoadBalancer
  loadBalancerIP: 192.168.10.251
  externalTrafficPolicy: Local
  selector:
    # label set automatically by the StatefulSet controller on each Pod
    statefulset.kubernetes.io/pod-name: pihole-0
  ports:
    - {name: http, port: 80, targetPort: 80}
```
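Both LoadBalancer Services ask MetalLB for addresses from a pool called `local-pool`, which isn't shown here. For completeness, a Layer 2 pool that covers both addresses could look roughly like this; the address range, the `metallb-system` namespace, and the L2Advertisement name are assumptions, so adapt them to your existing MetalLB setup and keep the range outside your router's DHCP scope.

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: local-pool
  namespace: metallb-system          # assumed MetalLB install namespace
spec:
  addresses:
    - 192.168.10.240-192.168.10.254  # hypothetical range; must include .250 and .251
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: local-pool-l2                # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
    - local-pool                     # announce this pool via ARP/NDP (Layer 2 mode)
```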

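After the rollout, the web UI answers at both 192.168.10.250 and 192.168.10.251, and DNS queries resolve as expected. Since .250 can land on either Pod, make configuration changes only through .251, which always reaches pihole-0.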

The last missing piece is keeping the two configs in sync.

## nebula-sync

nebula-sync is a community project for Pi-hole v6 that uses the new REST / Teleporter API to copy everything you care about from the primary to any number of replicas.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nebula-sync
  namespace: pihole
spec:
  replicas: 1
  selector: {matchLabels: {app: nebula-sync}}
  template:
    metadata: {labels: {app: nebula-sync}}
    spec:
      containers:
        - name: nebula-sync
          image: ghcr.io/lovelaze/nebula-sync:v0.11.0
          env:
            # Must be defined before PRIMARY/REPLICAS so $(PIHOLE_PASS) can be expanded
            - name: PIHOLE_PASS
              valueFrom:
                secretKeyRef:
                  name: pihole-admin
                  key: password
            # Format "<url>|<password>"; the headless Service provides the per-Pod names
            - name: PRIMARY
              value: "http://pihole-0.pihole-headless.pihole.svc.cluster.local|$(PIHOLE_PASS)"
            - name: REPLICAS
              value: "http://pihole-1.pihole-headless.pihole.svc.cluster.local|$(PIHOLE_PASS)"
            - {name: TZ, value: America/Los_Angeles}
            - {name: FULL_SYNC, value: "true"}
            - {name: RUN_GRAVITY, value: "true"}
            - {name: CRON, value: "*/2 * * * *"}  # sync every 2 minutes
          resources:
            requests: {cpu: 20m, memory: 32Mi}
```
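Scaling out later should mostly be a matter of raising `replicas` on the StatefulSet and listing the extra Pod in `REPLICAS`. nebula-sync takes multiple replicas as a comma-separated list of `url|password` entries (per its README, as I understand it); the `pihole-2` entry below is hypothetical and only exists if the StatefulSet runs three replicas.

```yaml
# Fragment for the nebula-sync container's env (assumes StatefulSet replicas: 3)
- name: REPLICAS
  value: "http://pihole-1.pihole-headless.pihole.svc.cluster.local|$(PIHOLE_PASS),http://pihole-2.pihole-headless.pihole.svc.cluster.local|$(PIHOLE_PASS)"
```

Each new Pod also gets its own pair of PVCs from the `volumeClaimTemplates`, so no storage changes are needed.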

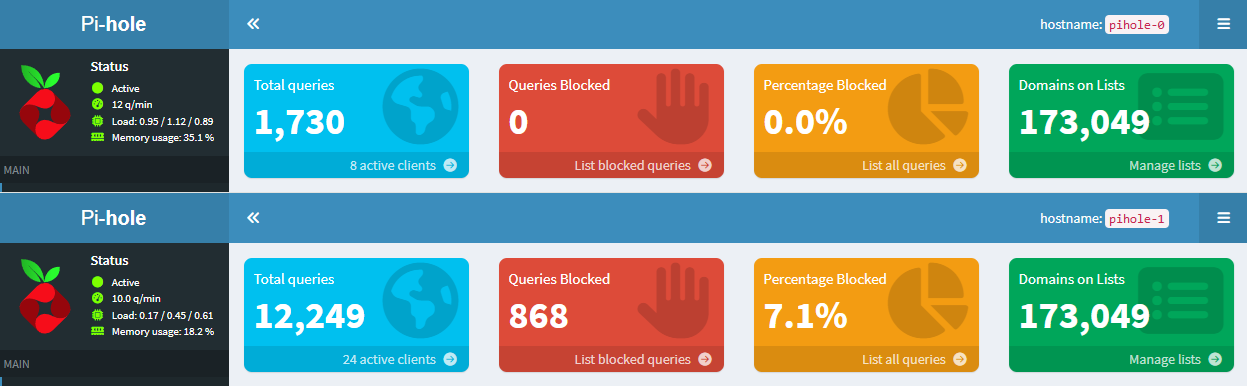

With that in place, we now have two Pi-hole v6 instances in an active-standby setup, each on its own dynamically provisioned NFS volume and kept in sync automatically. A single Pi-hole crash no longer takes the whole network's DNS down with it.
